After National Day 2024

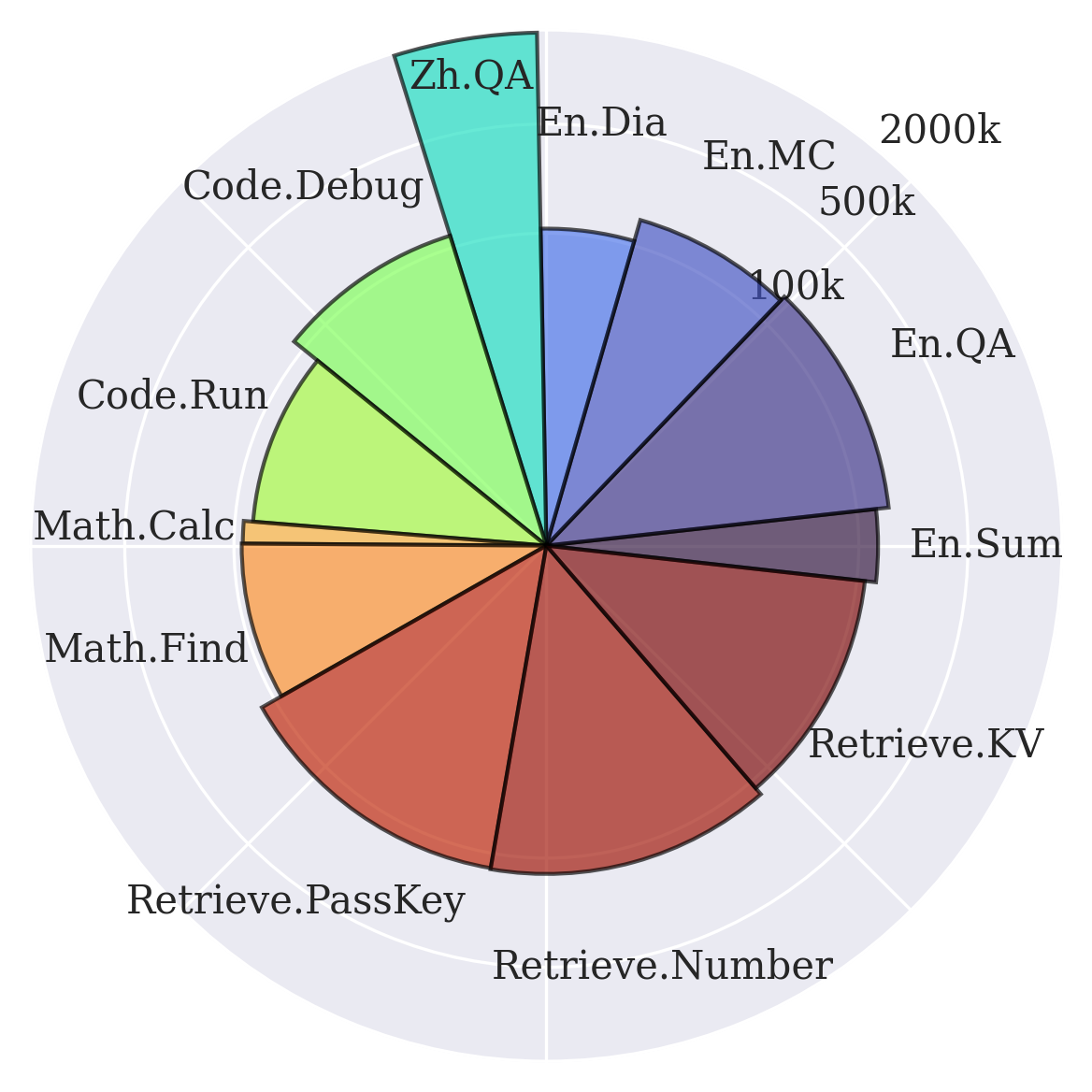

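The 🇨🇳 National Day holiday just ended, and I'm back in Beijing from Dongguan to continue my PhD. The past few months have been packed. It has been fulfilling but also tiring, so this seven-day holiday (really only five days) was a good chance to catch my breath. I haven't blogged in a long time; the last post about myself was, I think, the one from after last year's National Day. That makes it exactly a year, so this can double as an annual summary.

The main things that happened this year:

- I went from being a master's student to a PhD student.
- After my girlfriend graduated, she moved to Dongguan for work. We set up a little home there together and moved most of our "kids" over too (only Baymax and two pigs are left in my dorm now).
- My girlfriend came back to Norway with me to meet my parents. It was also her first time abroad.
- My family came to China for my graduation ceremony, met my girlfriend's family while they were here, and we got engaged.
- I became the leader of a research group in the lab and got involved in running the company, which makes me feel very much like a working stiff.
- I got to know a lot of people doing research, my understanding of research improved enormously, I read a huge number of papers, and I found a small area I like. It feels natural to work in, and I have lots of ideas.
- I became a teaching assistant for the NLP course, which was a very interesting experience.

Research

Last summer a senior labmate pulled me into working from my advisor's company, since the environment is nice and the money is good. After that it was only natural to join her research project, and so I moved into a new group (the senior student who had been mentoring me was about to graduate anyway). Later, around May I think, the leader of this group left for an internship and made me the group leader. It felt very different. At first the pressure was considerable, and I felt I wasn't capable enough and didn't deserve the position. It turned out fine, though. Everyone is just here to do research; the main difference is a lot more back-and-forth with other groups.

Coming here also led me to my new direction: RNNs and long context. I really like this kind of research area, a bit niche but still quite influential. Reading papers was hard going at first, since much of the underlying theory differs quite a bit from the currently popular Transformer, and the research challenges are very different too. But that is a good thing: fewer people in the field means less pressure to keep up with papers. (A side complaint: there are far too many papers these days. After every holiday I feel like I've missed countless of them!)

During this period I also submitted the Chu bamboo slips paper to ARR. The scores weren't great, so I recently resubmitted it to COLING. I also put it on arXiv. This kind of work doesn't feel very influential, but it is the first dataset of its kind, so it should pick up some citations. I seem to end up doing a lot of dataset work, hahaha.

I also wrapped up some work that had been going on for a while. The knowledge editing paper was accepted to COLING. $\infty$-Bench and the duplex interaction model, on both of which I am second author, are finished too; they were accepted to ACL and EMNLP respectively. Both turned out well and are getting plenty of citations. I've latched onto some strong collaborators, hahaha.

Starting the PhD

After this summer I went from being a master's student to a PhD student. On paper I was admitted through the regular PhD track, but I think the people in my lab see me as a direct-entry PhD student. I put all the work related to Chinese and ancient scripts into my master's thesis, so my PhD thesis will be about long-context and continual learning. I'm happy with that. I like this kind of change of environment; it makes me feel renewed and keeps things fresh. I also got a new dorm, Building 22, with the same roommate as before. It's newly renovated and the environment is good, but the shower area is a bit gross.

A few days ago, on October 2, I submitted to ICLR: "Stuffed Mamba: State Collapse and State Capacity of RNN-Based Long-Context Modeling". It is a piece of research I'm fairly satisfied with. I worked on it for a long time, and I think its impact should be decent. Then at 2 a.m. today I put it on arXiv, hoping for a slightly earlier spot in the listing. I'll write a separate blog post to organize and introduce it. This paper is only an appetizer, though: it explores the model's memory capacity and analyzes some collapse phenomena. The next step has to be actual changes to the model that improve its performance. That is the kind of representative work I'm aiming for, but it's quite hard. I have plenty of ideas, but machine learning research is a process of repeated trial and error, and most results end up differing a lot from what you guessed. My advisor wants me to train a very strong Mamba version of MiniCPM, but I think that kind of training, without any architectural change, makes no scientific contribution. Personally I'd still rather make scientific contributions, hahaha.

Life

During her last year at school, 00 and I saw each other and hung out every day.

Not long after last year's National Day, from October 27 to 31, we went to Dongguan together to visit the company. The environment there seemed very nice, but Dongguan as a city is quite run-down, and people's manners on average are fairly poor. Nothing to be done about that. On the 29th we went to Shenzhen for fun and met 于泽华, who was already working, and 00's cousin. On November 17, 00 and I went to Anlu, in Xiaogan, for the wedding of her high school classmate 金洁. I'm so envious that they could get married this early. The customs really are a hassle, though... Later 00 found an internship at 比特大陆 (Bitmain), in Fengtai District, across from 中关村壹号, quite close to 启元实验室, a company affiliated with our lab. Sometimes I would go to work at 启元 too, and then we could leave work together. ...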